Content Delivery Networks (CDNs) are typically most useful for sites with a widely dispersed audience, or those with high traffic. They serve static files from locations close to the user for faster response times and take load off the main server. However, a CDN can make small websites at the other end of the spectrum a lot faster too!

I recently built a simple one-page website for a client. It’s a minimal online presence to capture searches for their business name, provide some information and a contact form.

In theory even entry-level shared hosting should load a site like this very quickly. In practice it can be like a ballpoint pen – if it hasn’t been used for a while it’s slow to put ink on the page. Once it gets going it’s fine.

Low-traffic small business sites can effectively be ‘put at the back of the server’, like the ink that’s dried on the tip of a pen. If the site hasn’t been requested for several hours, that first response can be a lot slower than it should be. Subsequent hits might be perfectly quick, but that doesn’t help with the first impression.
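To see whether a site has this slow-first-hit problem, it can help to time a few requests in a row and compare the first against the rest. The following is a minimal sketch, not a benchmarking tool: `time_requests` and `cold_start_penalty` are hypothetical helper names, and the `fetch` callable is whatever you use to request the page.

```python
import time
from typing import Callable, List


def time_requests(fetch: Callable[[], object], count: int = 3) -> List[float]:
    """Call fetch() `count` times and return each call's duration in seconds."""
    durations = []
    for _ in range(count):
        start = time.perf_counter()
        fetch()
        durations.append(time.perf_counter() - start)
    return durations


def cold_start_penalty(durations: List[float]) -> float:
    """Seconds by which the first request was slower than the fastest later one."""
    if len(durations) < 2:
        raise ValueError("need at least two timings to compare")
    return durations[0] - min(durations[1:])
```

Against a real site, `fetch` could be something like `lambda: urllib.request.urlopen("https://example.com/").read()`, with `example.com` standing in for the site under test. The measurement only means much if the site has been left idle for a few hours beforehand.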

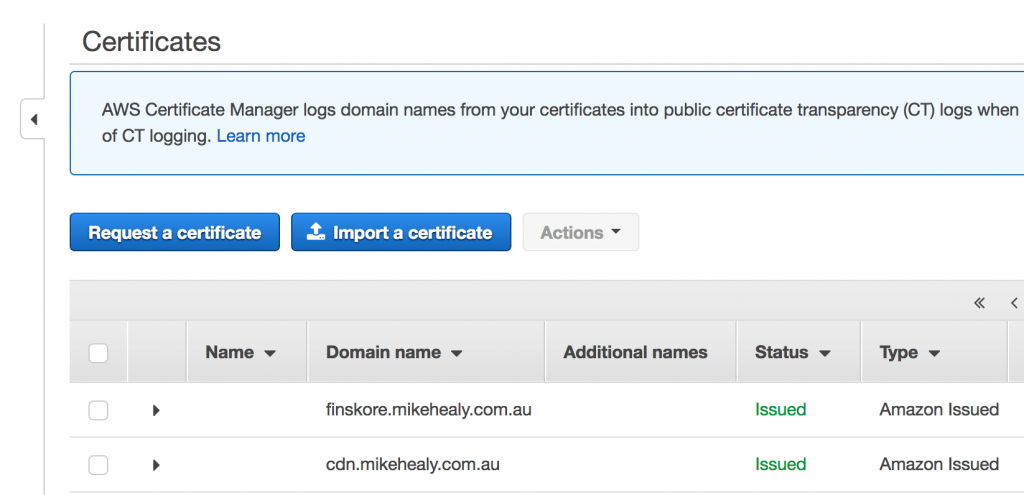

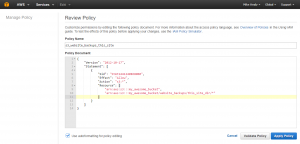

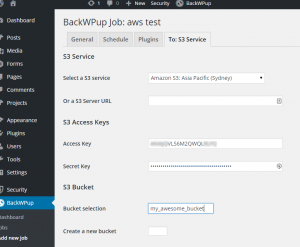

I was able to speed up this site considerably by using the traditional cPanel shared host as a CDN origin and routing the domain through CloudFront. CloudFront acts as a fast cache, storing a rendered version of the page (and its supporting files) to respond quickly to sporadic visits.
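The caching idea can be illustrated with a toy model. This is only a sketch of the general behaviour, not how CloudFront is implemented: the `EdgeCache` class, the `origin` callable and the one-day `ttl` are all assumptions made for illustration, and a real CDN also honours cache headers and may evict content earlier.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class EdgeCache:
    """Toy model of a CDN edge: keeps origin responses for `ttl` seconds."""
    origin: Callable[[str], str]   # hypothetical origin fetcher: path -> rendered body
    ttl: float = 86400.0           # assumed cache lifetime of one day
    _store: Dict[str, Tuple[float, str]] = field(default_factory=dict)

    def get(self, path: str, now: float) -> Tuple[str, str]:
        """Return (body, "Hit" or "Miss") for a request arriving at time `now`."""
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1], "Hit"
        body = self.origin(path)   # only a miss reaches the slow shared host
        self._store[path] = (now, body)
        return body, "Miss"
```

The point of the model is that the shared host is only contacted on a miss. A visitor arriving hours after the previous one still gets the stored copy, as long as it is within the cache lifetime.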

This retains the flexibility of some basic server-side rendering while massively speeding up that first page load. The backend functionality required to run the contact form works via an Ajax request to the underlying cPanel server.
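The split between the cached page and the uncached form handler can be sketched as a per-request decision. This is an illustration under assumptions: `/contact.php` is a hypothetical endpoint name, and the sketch does not say whether the Ajax request passes through the CDN uncached or goes to the origin's own hostname. It only shows which requests need to reach the shared host.

```python
CONTACT_ENDPOINT = "/contact.php"    # hypothetical path; the real handler name is not given
CACHEABLE_METHODS = {"GET", "HEAD"}  # methods a cache can safely answer from a stored copy


def route(method: str, path: str) -> str:
    """Return "edge-cache" if the CDN can answer from cache, otherwise "origin"."""
    if method.upper() in CACHEABLE_METHODS and path != CONTACT_ENDPOINT:
        return "edge-cache"
    # Form submissions must always run the server-side script.
    return "origin"
```

Keeping the form handler out of the cache matters because each submission has to run the server-side code. A cached response would mean the message never reaches the script.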

For simple sites, this approach was a lot easier to develop (read: cheaper) than full static site generation, while still giving some scripting capabilities and the speed of a static site deployed to a CDN.