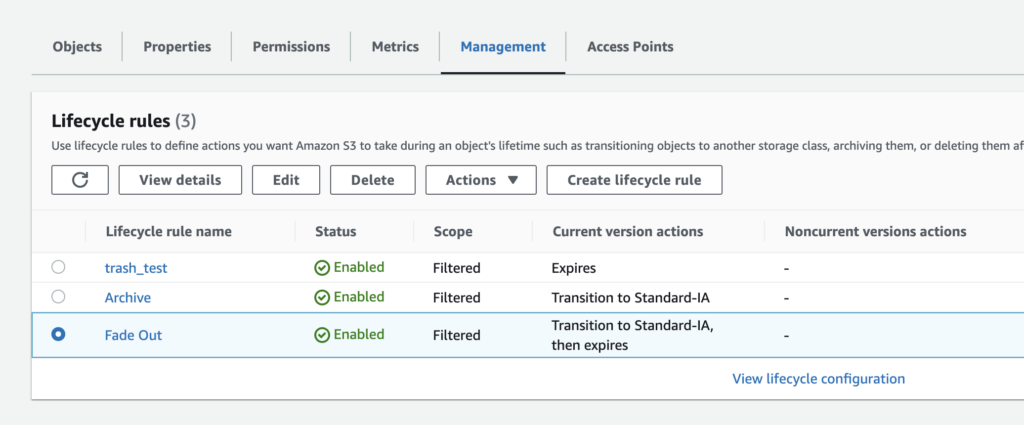

AWS S3 has a feature called Lifecycle rules that lets you automate actions on your objects, such as transitioning them to a different storage class or expiring (deleting) them.

This can be useful to save costs and clean up your object list by removing objects you don’t need to keep forever. You can lean on AWS for the expiry logic without having to program scheduled events to clean up for you.

Lifecycle rules can target an entire bucket, objects with a path prefix, or objects with particular tags.

Spatie Laravel Backup is a popular Laravel package for taking site/database backups.

It can be configured to write to various ‘disks’. If you’re using the S3 disk, you can tag those backups so that a Lifecycle rule can target them.

Creating the rules in S3 and targeting a tag

In my case I will be targeting a tag called fadeout with a value of true.

The rule transitions objects to the Standard-Infrequent Access (Standard-IA) storage class after 30 days, and then deletes them after 60 days. It also only applies to objects larger than 150kB.
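If you would rather script the rule than click through the console, the same settings can be written as a lifecycle configuration document. The following is a minimal sketch, not a copy of my console setup: the rule ID `fadeout-backups` is a made-up name, and I am assuming 150kB means 150 × 1024 bytes (the filter takes a size in bytes).

```php
<?php

// Sketch of the rule described above as an S3 lifecycle configuration.
// 'fadeout-backups' is a hypothetical rule ID; pick any name you like.
$lifecycle = [
    'Rules' => [
        [
            'ID' => 'fadeout-backups',
            'Status' => 'Enabled',
            // 'And' is required when combining a tag filter with a size filter.
            'Filter' => [
                'And' => [
                    'Tags' => [
                        ['Key' => 'fadeout', 'Value' => 'true'],
                    ],
                    // Size in bytes; assumes 150kB means 150 * 1024.
                    'ObjectSizeGreaterThan' => 150 * 1024,
                ],
            ],
            // Move to Standard-IA after 30 days...
            'Transitions' => [
                ['Days' => 30, 'StorageClass' => 'STANDARD_IA'],
            ],
            // ...and delete after 60 days.
            'Expiration' => ['Days' => 60],
        ],
    ],
];

// Print the configuration as JSON so it can be saved to a file.
echo json_encode($lifecycle, JSON_PRETTY_PRINT), PHP_EOL;
```

Saving the output as `lifecycle.json` should let you apply it with `aws s3api put-bucket-lifecycle-configuration --bucket your-bucket --lifecycle-configuration file://lifecycle.json`, where `your-bucket` is a placeholder. Be aware that this call replaces any lifecycle configuration already on the bucket, so include your existing rules in the file too.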

Tagging backups that Laravel Backup uploads

To have your backup objects tagged so that the lifecycle rule takes effect, you’ll need to add an option to your Laravel filesystems.php config file.

Assuming you are using the s3 disk, you’ll need to add a backup_options value to your S3 config array like so:

    's3' => [
        'driver' => 's3',
        'key' => env('AWS_ACCESS_KEY_ID'),
        'secret' => env('AWS_SECRET_ACCESS_KEY'),
        'region' => env('AWS_DEFAULT_REGION'),
        'bucket' => env('AWS_BUCKET'),
        'url' => env('AWS_URL'),
        'backup_options' => ['Tagging' => 'fadeout=true', 'visibility' => 'private'],
    ],

Backups are private by default, but because we’re overriding the options array we need to specify the visibility again.

The value of the Tagging key is a URL-encoded query string, as shown: key=value, with multiple tags joined by &.

The Tagging and visibility keys are case-sensitive, so write them exactly as shown.
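If you want more than one tag, or a tag value containing spaces or other special characters, hand-writing the encoded string gets error-prone. Here is a small sketch that builds it with PHP’s built-in `http_build_query`. The helper name `s3TaggingString` and the `app` tag are my own inventions for illustration and are not part of Laravel Backup.

```php
<?php

// Hypothetical helper: turns an array of tags into the URL-encoded
// string used for the 'Tagging' option.
function s3TaggingString(array $tags): string
{
    // RFC 3986 encoding writes spaces as %20 rather than +.
    // Passing '&' explicitly avoids depending on the arg_separator.output ini setting.
    return http_build_query($tags, '', '&', PHP_QUERY_RFC3986);
}

echo s3TaggingString(['fadeout' => 'true']), PHP_EOL;
// prints: fadeout=true

echo s3TaggingString(['fadeout' => 'true', 'app' => 'my site']), PHP_EOL;
// prints: fadeout=true&app=my%20site
```

Note that the tag value is the string `'true'`, not the boolean `true`, which `http_build_query` would encode as `1`.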

With that in place you can keep your recent backups without cluttering your bucket 🙂